Creative professionals are increasingly being asked to learn how to prompt systems using text, questions, or even code to cajole artificial intelligence into conjuring concepts. With traditional tools, digital or physical, sidelined, the process can sometimes feel like navigating a room you’re acquainted with, but with the lights flickering or even completely turned off. But what if you could interact with a generative AI system on a much more human level, one that reintroduces the tactile into the process? Zhaodi Feng’s Promptac combines the “prompt” with the “tactile” in name and in practice, with an intriguing interface built on human sensations that don’t feel quite so…artificial.

These pieces may look like unusual bits of pastel-hued pasta, but attached to an RFID sensor, each one operates as a tactile interface component, allowing users to manipulate generated virtual objects by sampling colors or textures and by applying pressure and movement.

Designed for the Royal College of Art Graduate Show in London, Feng’s device, with its exposed wires, may give off a science experiment vibe. But watch the Arduino-powered concept in use, and the system’s potential seems immediately applicable. Promptac illustrates how designers across a multitude of disciplines may one day be able to apply colors, textures, and materials dynamically to virtual objects created by generative AI, with a degree of physicality currently absent.

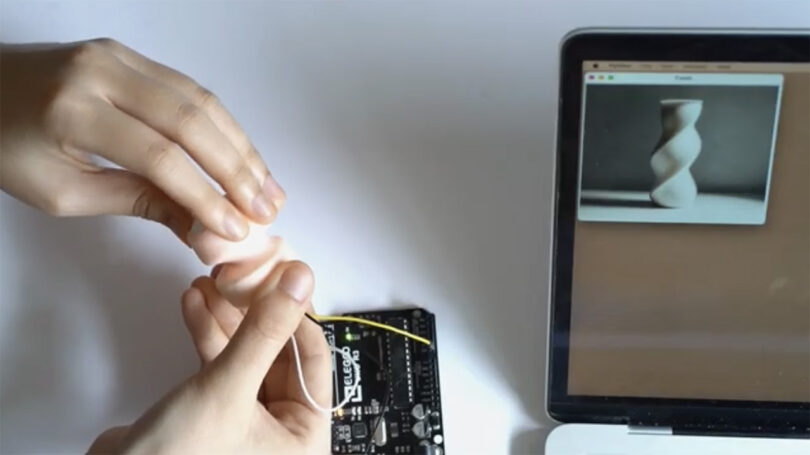

During the tech demo, Feng uses Promptac to physically manipulate a virtual vase, twisting the object into a spiral using a tactile, rubbery knob interface.

The design process with Promptac begins much like that of any other natural language AI prompting system: with a carefully worded request to produce a desired object to use as a canvas or model. From there, the generated image’s color, shape, size, and perceived texture can be manipulated using a variety of oddly shaped “hand manipulation sensors.”

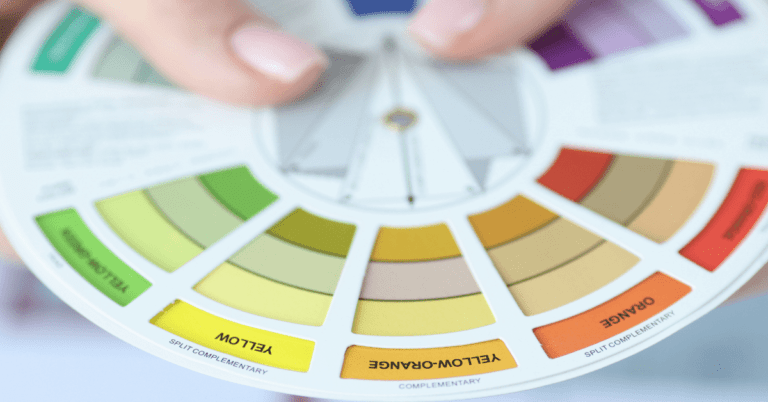

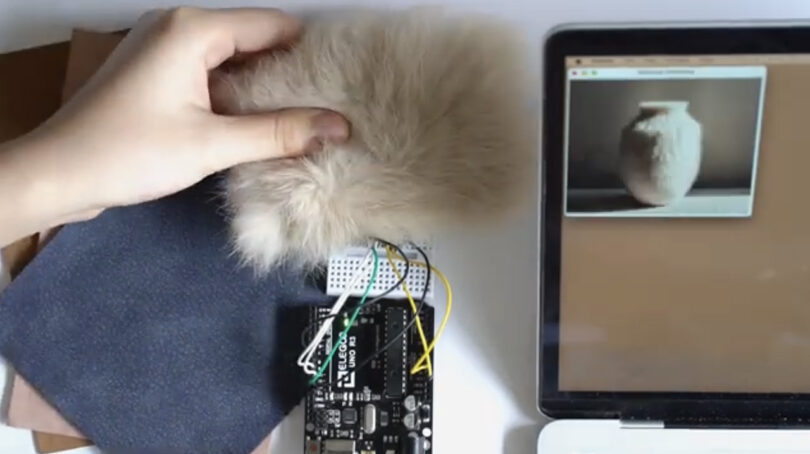

Textures and hues can be altered by sampling real-world swatches of color or even physical materials. Feng is shown applying a shag surface across a vase by sampling a swatch of the furry material.

Feng believes that by reducing reliance on text prompts, Promptac can also help individuals with disabilities interact with generative AI tools.

The potential becomes more evident when observing Promptac in action:

[embedded content]

To see more of Zhaodi Feng’s work for the Royal College of Art Graduate Show, visit her student project submissions here.